AMAZING! Robots Taught to Perform Complex Tasks Using AI and Reinforcement Learning!

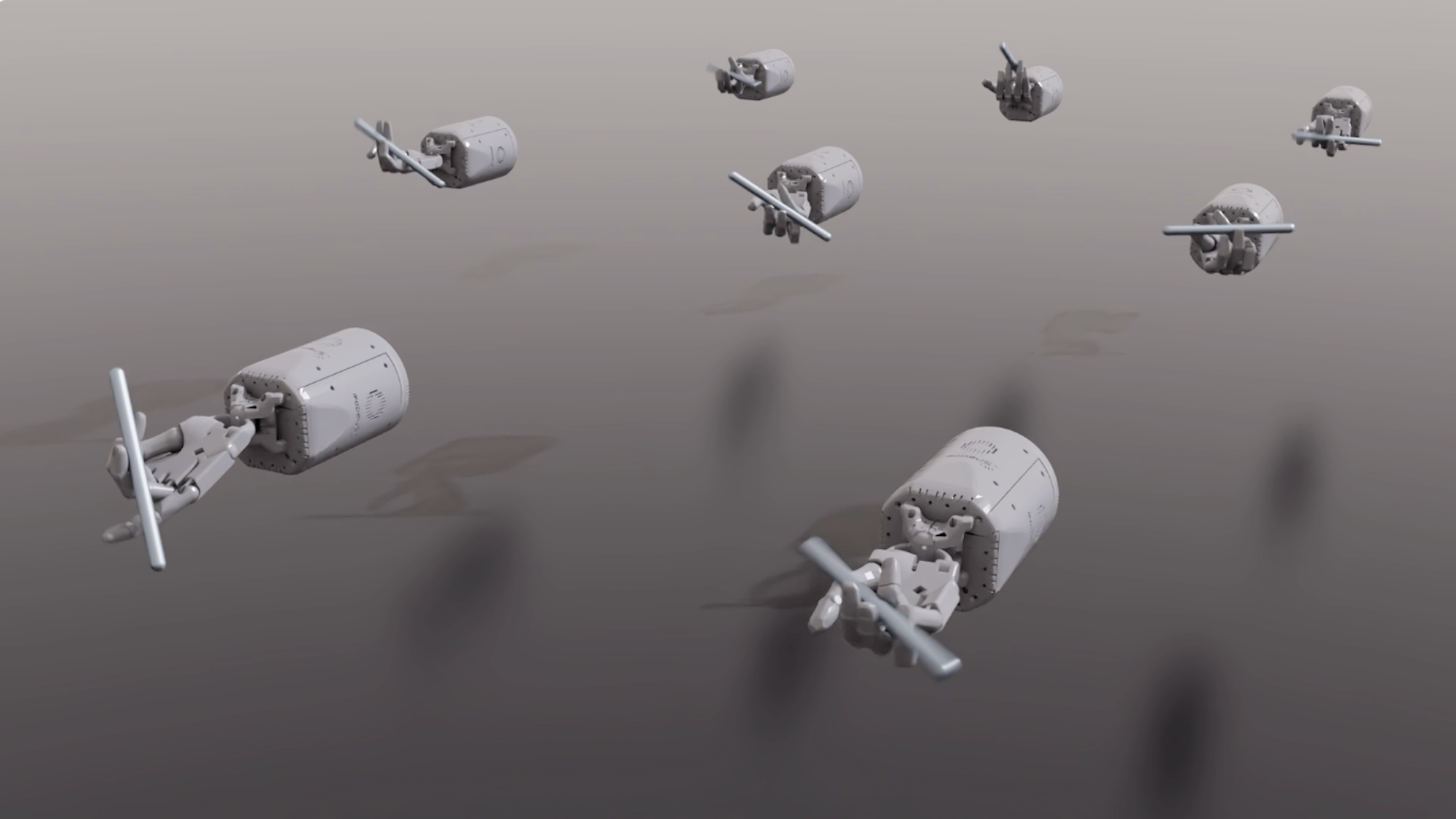

In a groundbreaking experiment, researchers at NVIDIA have used artificial intelligence (AI) to teach simulated robots nearly 30 complex tasks. These robots can now toss a ball, push blocks, and even twirl pens with incredible dexterity.

NVIDIA’s new Eureka “AI agent” combines a large language model (LLM), OpenAI’s GPT-4, with GPU-accelerated simulation. Rather than controlling the robot directly, Eureka has GPT-4 write reward programs: code that scores a robot’s behavior so it can learn by trial and error through reinforcement learning. Eureka doesn’t require task-specific prompts or pre-written reward templates. Instead, it refines its reward code based on how the simulated robotic hand performs, and it can also incorporate human feedback.
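To make the idea of an LLM-driven reward search more concrete, here is a toy sketch of the loop: propose candidate reward programs, train a policy with each one, score every policy on a fixed task metric, keep the best, and summarize the results as feedback for the next round. This is a heavily simplified illustration, not NVIDIA’s code. The assumptions: a 1-D track stands in for the physics simulator, three hand-written functions (`misleading_reward`, `hover_reward`, `progress_reward`, all hypothetical) stand in for reward code that GPT-4 would write, and value iteration stands in for the reinforcement-learning training run.

```python
# Toy sketch of an Eureka-style reward search loop (all names are hypothetical).
# Assumption: a 1-D track (cells 0..9) replaces the GPU-accelerated simulator.
# The robot starts in cell 0; the task is to reach cell 9.
N_CELLS = 10
GOAL = N_CELLS - 1
ACTIONS = (-1, +1)  # move left, move right
GAMMA = 0.9
MAX_STEPS = 20


def step(state, action):
    """Deterministic dynamics: move one cell, clamped to the track ends."""
    return min(max(state + action, 0), N_CELLS - 1)


# Hand-written placeholders for candidate reward programs an LLM might propose.
def misleading_reward(state, next_state):
    # Poor design: pays for moving left, away from the goal.
    return 1.0 if next_state < state else 0.0


def hover_reward(state, next_state):
    # Poor design: pays for entering a midpoint cell, so the robot loiters there.
    return 1.0 if next_state == 4 else 0.0


def progress_reward(state, next_state):
    # Dense shaping: reward progress toward the goal plus a bonus on arrival.
    progress = abs(GOAL - state) - abs(GOAL - next_state)
    return progress + (10.0 if next_state == GOAL else 0.0)


def q_value(v, state, action, reward_fn):
    """Reward for one move plus discounted value of the next cell (goal is terminal)."""
    next_state = step(state, action)
    future = 0.0 if next_state == GOAL else GAMMA * v[next_state]
    return reward_fn(state, next_state) + future


def optimize_policy(reward_fn, iterations=200):
    """Value iteration: a stand-in for the RL training run each candidate gets."""
    v = [0.0] * N_CELLS
    for _ in range(iterations):
        v = [0.0 if s == GOAL else max(q_value(v, s, a, reward_fn) for a in ACTIONS)
             for s in range(N_CELLS)]
    # Greedy policy: index of the best action in each cell (ties go to "left").
    return [max(range(len(ACTIONS)), key=lambda i: q_value(v, s, ACTIONS[i], reward_fn))
            for s in range(N_CELLS)]


def task_fitness(policy):
    """Fixed task metric (did the robot reach the goal?), independent of any reward."""
    state = 0
    for _ in range(MAX_STEPS):
        state = step(state, ACTIONS[policy[state]])
        if state == GOAL:
            return 1.0
    return 0.0


def reward_search(candidates):
    """One round: train a policy per candidate reward, score it, keep the best."""
    scores = {fn.__name__: task_fitness(optimize_policy(fn)) for fn in candidates}
    best = max(scores, key=scores.get)
    # Text summary that, in an Eureka-like setup, would be fed back to the LLM.
    feedback = "; ".join(f"{name}: fitness {score:.1f}" for name, score in scores.items())
    return best, scores, feedback


best, scores, feedback = reward_search([misleading_reward, hover_reward, progress_reward])
print(best)  # progress_reward
print(feedback)
```

The key design choice mirrored here is that candidates are judged by a task metric (`task_fitness`) that the reward functions cannot influence, so a reward that is easy to maximize but leads the robot astray, like `hover_reward`, scores poorly.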

According to Linxi “Jim” Fan, a senior research scientist at NVIDIA, Eureka could revolutionize robot control and offer a new way to create physically realistic animations. The results speak for themselves: a Eureka-trained robotic hand can perform pen-spinning tricks that rival those of skilled humans.

What’s more, Eureka’s generated reward programs outperformed those written by human experts on more than 80% of tasks, yielding an average performance improvement of more than 50% in robotic simulations. Because reward programs are what drive a robot’s trial-and-error learning, these AI-written alternatives are not only effective but often superior to hand-crafted ones.

Anima Anandkumar, senior director of AI research at NVIDIA, explained that Eureka is just a first step toward developing algorithms that integrate generative AI and reinforcement learning methods to solve difficult tasks.

This remarkable achievement may pave the way for more efficient training of robots, leading to advancements in automation and AI technology. What else will these incredible robots be capable of? The possibilities are endless!

What do you think of this AI breakthrough? Leave a comment below and let us know your thoughts!

IntelliPrompt curated this article. Read the full story at the original source.